Learn how businesses can run afoul of privacy laws with generative AI chatbots like ChatGPT. Plus, the job market for cyber analysts and engineers looks robust. Also, cybercrooks lost a major resource with the Genesis Market shutdown. In addition, the six common mistakes cyber teams make. And much more!

Dive into six things that are top of mind for the week ending April 7.

1 - UK regulator: How using ChatGPT can break data privacy rules

Businesses can inadvertently violate data privacy laws and regulations when they use or develop generative AI chatbots like ChatGPT, the U.K. government said this week, the latest warning about the legal risks of misusing this artificial intelligence technology.

For example, the Italian government last week temporarily blocked ChatGPT, citing privacy concerns. Meanwhile, the EU, like many countries, is considering rules to regulate the use of AI in general and of AI chatbots in particular. It’s clear that organizations must closely monitor the global regulatory and legal landscape around generative AI as they ponder using this technology.

But back to the U.K. How can ChatGPT business users reduce their risk of getting into data-privacy hot water there? From the start, companies should fully understand their “data protection obligations” and approach data protection “by design and by default.” So said Stephen Almond, Executive Director, Regulatory Risk, at the U.K. Information Commissioner’s Office (ICO) in a blog post.

“This isn’t optional – if you’re processing personal data, it’s the law,” he wrote. “Data protection law still applies when the personal information that you’re processing comes from publicly accessible sources.”

Almond’s recommendations for organizations using generative AI chatbots that process personal data include:

- Understand your “lawful basis” for processing personal data, such as consent

- Establish whether you are a controller, joint controller or a processor of the personal data being handled, and, based on that, grasp your obligations

- Prepare a data protection impact assessment (DPIA) and update it as necessary

- Ensure transparency by disclosing information about the data processing methods

- Consider and mitigate data security risks, such as leakage, data poisoning, model inversion and membership inference

- Limit the amount of data you collect and process to what’s necessary

- Be ready to respond to people’s requests for data access, rectification, erasure and more
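As a rough illustration of the data-minimization recommendation above, here is a minimal Python sketch that strips a record down to an allow-list of fields before it is passed to a chatbot. The field names, the `ALLOWED_FIELDS` set and the `minimize` helper are all hypothetical and do not come from the ICO guidance.

```python
# Hypothetical allow-list: the only fields this use case is assumed to need.
ALLOWED_FIELDS = {"ticket_id", "product", "issue_summary"}


def minimize(record: dict) -> dict:
    """Return a copy of the record that keeps only allow-listed fields."""
    return {key: value for key, value in record.items() if key in ALLOWED_FIELDS}


# Made-up support ticket that mixes necessary data with personal data.
ticket = {
    "ticket_id": "T-1001",
    "customer_name": "Jane Example",
    "email": "jane@example.com",
    "product": "Router X",
    "issue_summary": "Device reboots every hour",
}

prompt_data = minimize(ticket)
print(prompt_data)  # name and email are dropped before any further processing
```

An allow-list fails safe when new fields are added to the record, but it does not inspect free-text fields such as `issue_summary`, which could still contain personal data.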

For more information about data privacy concerns around the use of ChatGPT and generative AI in general:

- “Italy became the first Western country to ban ChatGPT. Here’s what other countries are doing” (CNBC)

- “ChatGPT Bug Exposed Some Subscribers' Payment Info” (CNET)

- “Amid ChatGPT furor, U.S. issues framework for secure AI” (Tenable)

- “ChatGPT Has a Big Privacy Problem” (Wired)

- “ChatGPT is a data privacy nightmare, and we ought to be concerned” (Ars Technica)

VIDEOS

Tenable CEO Amit Yoran discusses recent spy balloons and AI on CNBC (Tenable)

U.K.’s NCSC Raises Privacy Concerns about ChatGPT (Tenable)

2 - NIST unveils AI resource center

And continuing with the ChatGPT and generative AI topic, here’s another resource with guidance and information for organizations on how to avoid AI privacy and security pitfalls.

The U.S. National Institute of Standards and Technology (NIST) has just launched its “Trustworthy and Responsible AI Resource Center,” which the agency describes as “a one-stop-shop for foundational content, technical documents and AI toolkits.”

The center’s goal is to help AI system designers, developers and users in government, the private sector and academia adopt NIST’s “AI Risk Management Framework,” launched in January of this year.

For more information:

- “As ChatGPT Fire Rages, NIST Issues AI Security Guidance” (Tenable)

- “Machine Generated Text: A Comprehensive Survey of Threat Models and Detection Methods” (American University and University of Ottawa researchers)

- “Citing Risks to Humanity, AI & Tech Leaders Demand Pause on AI Research” (InformationWeek)

- “OpenAI’s ChatGPT and GPT-4 Used as Lure in Phishing Email, Twitter Scams to Promote Fake OpenAI Tokens” (Tenable)

- “Europol sounds alarm about criminal use of ChatGPT, sees grim outlook” (Reuters)

VIDEOS

Introduction to the NIST AI Risk Management Framework (NIST)

Anatomy of a Threat: GPT-4 and ChatGPT Used as Lure in Phishing Scams Promoting Fake OpenAI Tokens (Tenable)

3 - CompTIA: Cybersecurity analysts and engineers in high demand

Good news on the job front for cybersecurity analysts and engineers.

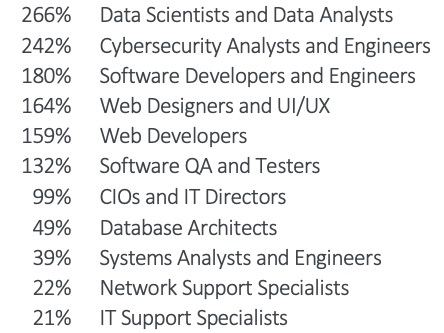

Demand for these cyber pros in 2023 ranks second among all tech occupations with a projected 5.2% growth in jobs. That’s according to CompTIA’s “State of the Tech Workforce Report” for 2023. Only data scientists and analysts ranked higher with a 5.5% projected job growth.

But that’s not all. The long-term job outlook also looks rosy: The job growth rate for cybersecurity analysts and engineers over the next 10 years is projected at a whopping 242% above the U.S. national average – again second only to data scientists and analysts.

It’s estimated that there are almost 179,000 cybersecurity analysts and engineers in the U.S. right now, a number expected to grow to almost 234,000 in 2033, according to the CompTIA report.

Projected Tech Job Growth Above the National Average Over the Next 10 Years

(Source: CompTIA’s “State of the Tech Workforce Report” March 2023)
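To put the headcount estimates cited above in perspective, the short Python sketch below works out the growth they imply. It assumes the rounded figures quoted in this post (179,000 and 234,000) and a 10-year window; the function names are illustrative and the calculation is not taken from the CompTIA report.

```python
# Rounded figures quoted in this post ("almost" 179,000 and "almost" 234,000).
current_jobs = 179_000
projected_jobs = 234_000
years = 10  # assumed window, from now to 2033


def total_growth(start: float, end: float) -> float:
    """Growth over the whole period, as a fraction of the starting value."""
    return (end - start) / start


def annualized_growth(start: float, end: float, periods: int) -> float:
    """Compound annual growth rate implied by the start and end values."""
    return (end / start) ** (1 / periods) - 1


print(f"Total growth: {total_growth(current_jobs, projected_jobs):.1%}")
print(f"Annualized:   {annualized_growth(current_jobs, projected_jobs, years):.1%}")
```

With these rounded inputs, the implied increase is roughly 31% over the decade, or a bit under 3% a year when compounded.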

Data for the 146-page report comes from a variety of sources, including the U.S. Bureau of Labor Statistics, the U.S. Bureau of Economic Analysis and labor market analytics company Lightcast.

To get more details, you can read the full report, highlights and a blog post about the rising demand for cybersecurity analysts.

4 - Genesis Market, a notorious cybercrime mart, gets dismantled

Cybercriminals have one less weapon in their arsenal.

An international law enforcement operation this week shut down hacker marketplace Genesis Market, which primarily peddled digital identity data – such as login credentials for financial accounts – stolen from about 1.5 million computers globally.

As a “prolific access broker,” the five-year-old Genesis Market was “a key enabler of ransomware,” the U.S. Department of Justice said in a statement in which it called the law enforcement operation “unprecedented.”

During its lifetime, Genesis Market facilitated cyberattacks by offering access to about 80 million account-access credentials for financial institutions, critical infrastructure providers, government agencies and other organizations, the DOJ said.

(Source: Europol)

Genesis Market stood apart in several ways. For example, it didn’t just offer login credentials. It sold what it called “bots,” which collected cookies, saved logins and autofill form data from a compromised device in real time, and even provided buyers with a custom browser designed to mimic the victim’s. That way, the fraudster could log in to the victim’s accounts without triggering red flags such as an unfamiliar log-in location and a different browser fingerprint, according to Europol.
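To see why mimicking the victim’s browser matters, consider the simplified Python sketch below of the kind of red-flag check described above. Everything in it is hypothetical: the `LoginContext` fields, the `red_flags` function and the sample values are illustrative assumptions, and real fraud-detection systems weigh many more signals.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LoginContext:
    """Hypothetical signals a site might record for each login attempt."""
    country: str
    browser_fingerprint: str  # e.g. a hash of user agent, fonts and screen size


def red_flags(known: LoginContext, attempt: LoginContext) -> list[str]:
    """Compare a login attempt against the account's usual context."""
    flags = []
    if attempt.country != known.country:
        flags.append("unfamiliar log-in location")
    if attempt.browser_fingerprint != known.browser_fingerprint:
        flags.append("different browser fingerprint")
    return flags


# Made-up values for illustration only.
victim = LoginContext(country="NL", browser_fingerprint="fp-victim-123")
stolen_password_only = LoginContext(country="XX", browser_fingerprint="fp-attacker-999")
# Assumes the buyer replays the victim's fingerprint and appears in the same location.
mimicked_session = LoginContext(country="NL", browser_fingerprint="fp-victim-123")

print(red_flags(victim, stolen_password_only))  # both checks fire
print(red_flags(victim, mimicked_session))      # no checks fire
```

In this toy model, a stolen password used from the attacker’s own browser trips both checks, while a session that replays the victim’s context passes cleanly.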

The U.S. Treasury Department, which said it believes Genesis Market operated out of Russia, called it “a key resource for cybercriminals” and one of the largest and most prominent cybercrime marketplaces in the world.

The sting, which was dubbed “Operation Cookie Monster,” involved law enforcement agencies from 17 countries and was led by the U.S. Federal Bureau of Investigation and the Dutch National Police. As of Wednesday, it had netted 119 arrests and 208 property searches.

For more information:

- “Notorious stolen credential warehouse Genesis Market seized by FBI” (The Register)

- “FBI seizes Genesis Market, a notorious hacker marketplace for stolen logins” (TechCrunch)

- “FBI Seizes Bot Shop ‘Genesis Market’ Amid Arrests Targeting Operators, Suppliers” (Krebs on Security)

- “Operation Cookie Monster: Feds seize ‘notorious hacker marketplace’” (Ars Technica)

VIDEO

Cybercrime website Genesis Market shut down in global law enforcement crackdown (BBC News)

5 - 3CX softphone desktop app reportedly compromised

A softphone desktop application for Windows and macOS from 3CX, which makes a Voice over Internet Protocol (VoIP) Private Branch Exchange (PBX) system used by over 600,000 organizations, has reportedly been trojanized as part of a supply chain attack.

Check out the blog post from Tenable Senior Staff Research Engineer Satnam Narang to get all the details about this incident, in which the product was reportedly compromised as part of an “active intrusion campaign” aimed at 3CX customers.

For more information:

- “3CX Breach Widens as Cyberattackers Drop Second-Stage Backdoor” (Dark Reading)

- “SmoothOperator Campaign Trojanizes 3CXDesktopApp” (SentinelOne)

- “3CX users under DLL-sideloading attack” (Sophos)

- “3CX supply chain attack: What do we know?” (Help Net Security)

6 - Six critical and common cyber mistakes

Is your cybersecurity team making any of these six frequent blunders, recently identified by NIST? Check them out.

- Assuming your users are clueless about cybersecurity, which can create an adversarial relationship between them and the cyber team

- Failing to tailor your security-awareness message to your different audiences, which harms their engagement

- Overlooking how software that provides a poor user experience can create security threats by confusing users

- Having excessive security by default, which creates a cyber environment that’s too rigid and restrictive

- Relying on negative reinforcement methods to promote cybersecurity practices among users

- Downplaying the importance of collecting user metrics to concretely track how much of the security-awareness information users are retaining

Get more details, including suggestions for remedying these mistakes, by reading the NIST research article “Users are not stupid: Six cyber security pitfalls overturned” and the accompanying infographic.