Find out why cyber teams must get hip to AI security ASAP. Plus, check out the top risks of ChatGPT-like LLMs. Also, learn what this year’s Verizon DBIR says about BEC and ransomware. Plus, the latest trends on SaaS security. And much more!

Dive into six things that are top of mind for the week ending June 9.

1 – Forrester: You must defend AI models starting “yesterday”

Add another item to cybersecurity teams’ packed list of assets to secure: AI models. Why? Their organizations will likely begin building AI models in-house imminently – if they’re not already doing so.

That’s the forecast provided by Forrester Research Principal Analyst Jeff Pollard and Senior Analyst Allie Mellen in their recent blog “Defending AI Models: From Soon To Yesterday.”

The AI models in question are so-called fine-tuned models – those which organizations will build and customize internally using corporate data. Fine-tuned models are not to be confused with commercially available, generalized models from vendors like Microsoft or Google.

“Fine-tuned models are where your sensitive and confidential data is most at risk,” Pollard and Mellen wrote.

“Unfortunately, the time horizon for this is not so much ‘soon’ as it is ‘yesterday.’ Forrester expects fine tuned-models to proliferate across enterprises, devices, and individuals, which will need protection,” they added.

According to the analysts, potential attacks against these models include:

- Model theft, which will compromise the competitive advantage of the affected organization’s business model and therefore affect revenue generation

- Inference attacks, in which hackers find a way for a model to inadvertently leak sensitive data

- Data poisoning, in which attackers tamper with a model’s data to get it to generate inaccurate or unwanted results

The analysts expect that security products will emerge to help cyber teams protect AI models via bot management, API security, AI/ML security tools and prompt engineering.

For more details, read the Forrester Research blog “Defending AI Models: From Soon To Yesterday.”

To get more information about securing AI models:

- “How secure are your AI and machine learning projects?” (CSO Online)

- “Securing Artificial Intelligence” (ETSI)

- “OWASP AI Security and Privacy Guide” (OWASP)

- “Securing Machine Learning Algorithms” (ENISA)

- “Adversarial Machine Learning: A Taxonomy and Terminology of Attacks and Mitigations” (U.S. National Institute of Standards and Technology)

VIDEOS

How To Secure AI Systems (Stanford University)

Securing AI systems at scale (AI Infrastructure Alliance)

2 – OWASP ranks top security risks for AI LLMs

And staying on the topic of AI model security, the OWASP Foundation has published a list of the top 10 security risks involved in deploying and managing large language models (LLMs), like the popular generative AI chatbot ChatGPT.

Here’s the list, which is aimed at developers, designers, architects, managers and organizations working on LLMs:

- Prompt injections, aimed at bypassing an LLM’s filters or manipulating it into violating its own controls to perform malicious actions

- Data leakage, resulting in the accidental disclosure, via an LLM’s responses, of sensitive data, proprietary algorithms or other confidential information

- Inadequate sandboxing, where an LLM is improperly isolated when it has access to external resources or sensitive systems

- Exploitation of LLMs, to get them to execute code, commands or actions that are unauthorized or malicious

- Server-side request forgery (SSRF) attacks, aimed at getting an LLM to grant unintended requests or access restricted resources

- Excessive reliance on content generated by LLMs that isn’t reviewed and vetted by humans

- Misalignment between an LLM’s objectives and behavior, and its intended use case

- Lack of access controls and authentication

- Exposure of error messages and debugging information that could reveal sensitive information

- Malicious manipulation of the LLMs training data

The list is still at a draft stage. OWASP encourages interested community members to participate in the ongoing work of advancing and refining the “Top 10 for Language Model Applications” project.

To get more details, read the project’s announcement, go to the project’s home page and check out the list of risks.

For more information about using generative AI tools like ChatGPT securely and responsibly, check out these Tenable blogs:

- “CSA Offers Guidance on How To Use ChatGPT Securely in Your Org”

- “As ChatGPT Concerns Mount, U.S. Govt Ponders Artificial Intelligence Regulations”

- “As ChatGPT Fire Rages, NIST Issues AI Security Guidance”

- “A ChatGPT Special Edition About What Matters Most to Cyber Pros”

- “ChatGPT Use Can Lead to Data Privacy Violations”

VIDEOS

Anatomy of a Threat: GPT-4 and ChatGPT Used as Lure in Phishing Scams Promoting Fake OpenAI Tokens

Tenable Cyber Watch: Is AI Vulnerability Management On Your Radar Screen?

3 – Verizon DBIR warns about BEC and ransomware

Cybersecurity teams take note: The threats from business email compromise (BEC) and ransomware attacks have intensified over the past year.

So says the Verizon 2023 Data Breach Investigations Report (DBIR), which analyzed around 16,000 incidents and 5,200 breaches between November 1, 2021, and October 31, 2022.

Let’s dive into some details about BEC scams, in which attackers dupe an employee into disclosing sensitive financial information or transferring money to them:

- These fraudulent schemes, also known as “pretexting,” almost doubled in frequency and now represent more than half of all social engineering incidents

- The median amount stolen via BEC has grown to over $50,000 per incident in the past two years

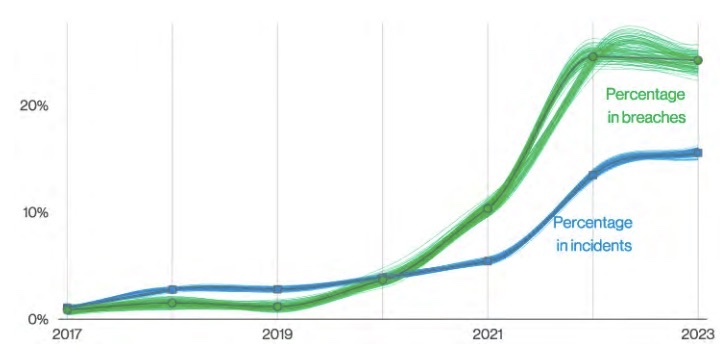

Regarding ransomware, here are some key findings:

- Ransomware accounted for 24% of all breaches, as ransomware operators boost automation and efficiency

- The median cost of a ransomware attack more than doubled to $26,000 over the past two years

- There have been more ransomware attacks in the past two years than in the prior five years combined

Ransomware Action Variety Overtime

(Source: Verizon 2023 Data Breach Investigations Report, June 2023)

Other interesting findings include:

- The “human element” played a part in 74% of all breaches, meaning that someone made an error or fell for a social engineering scam, or that an attacker used stolen credentials

- In the category of errors that led to breaches, 21% of them were misconfigurations, most of which were caused by developers and system administrators

- External actors were involved in 83% of breaches

- The motivation behind 95% of attacks was financial

- The top three techniques for breaching a victim’s IT environment were: using stolen credentials; phishing; and exploiting vulnerabilities

To get all the details from the DBIR, which was released this week and is now in its 16th year, visit its main resources page, check out its announcement, download the full report and watch this video:

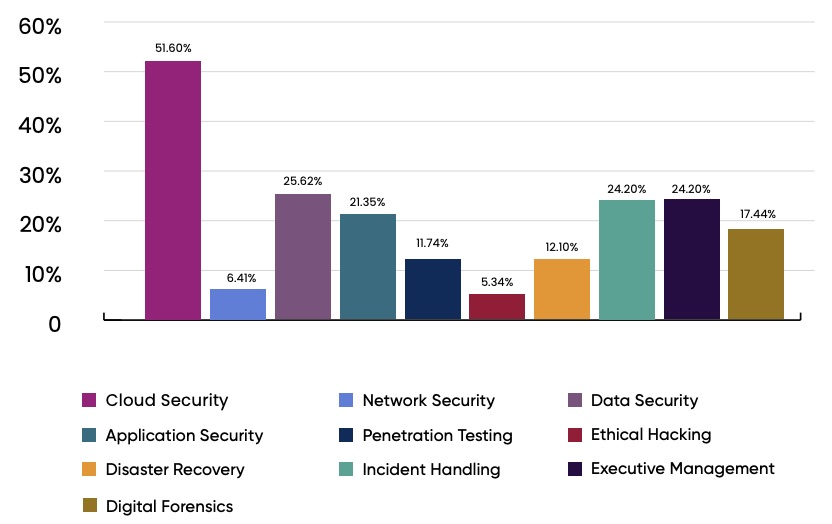

4 – Cloud security tops CISOs’ concerns

CISOs worry the most about their organizations’ cloud security. And not only that. Among their cybersecurity teams’ functions, cloud security also ranks first in skills shortage.

Those are two of the findings from the EC-Council’s “2023 Certified CISO Hall of Fame Report” for which the cybersecurity education and training organization polled 281 C-level security executives from around the world and from multiple industries.

When respondents were asked to name their greatest areas of concern, cloud security topped the list with 49.1% of polled CISOs choosing it. Data security ranked second (34.5%), followed by cybersecurity governance (32.7%).

Greatest Areas of Concern in Cybersecurity

(Source: EC-Council’s “2023 Certified CISO Hall of Fame Report”. The 281 respondents could choose more than one answer.)

Regarding the areas with the biggest gap between their organizations’ needs and the available talent, cloud security was the runaway top pick again, chosen by 51.6% of respondents. Data security ranked a distant second.

Gaps between needs and talents

(Source: EC-Council’s “2023 Certified CISO Hall of Fame Report”. The 281 respondents could choose more than one answer.)

To get more details, read the report’s announcement and check out the full report.

For more information about the challenges CISOs face today:

- “5 Challenges CISOs Are Facing in 2023”(Infosecurity Magazine)

- “The modern CISO: Today’s top cybersecurity concerns and what comes next” (Cybersecurity Dive)

- “CISOs Share Their 3 Top Challenges for Cybersecurity Management” (Dark Reading)

- “Risk management challenges for CISOs and how to proceed” (SC Magazine)

VIDEOS

Five challenges you face as a CISO (Eric Cole)

Transparency from a CISO's Perspective (SANS Institute)

5 – CSA looks at evolving SaaS security landscape

The Cloud Security Alliance has taken a revealing snapshot of trends in software-as-a-service security.

For “The Annual SaaS Security Survey Report: 2024 Plans and Priorities,” released this week, the CSA polled 1,130 IT and security pros and found, among other things, that:

- 58% say their current SaaS security tools only cover about half or less of their SaaS applications

- As SaaS adoption spreads within organizations, security leaders are shifting their SaaS security roles from hands-on controllers to governance overseers, delegating security management to stakeholders who “own” specific apps and are closer to their daily use

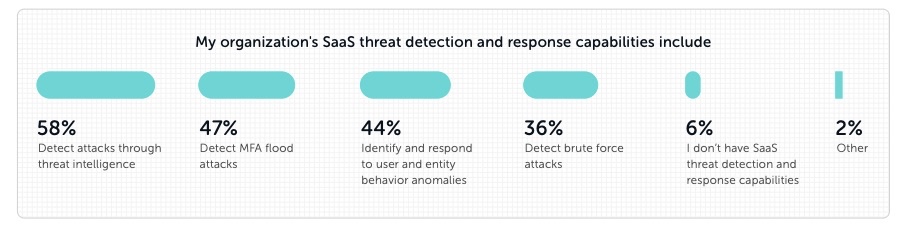

- The scope of SaaS security keeps broadening, and now includes misconfigurations, third-party app-to-app access, device-to-SaaS risk management, identity and access governance and threat detection and response

(Source: Cloud Security Alliance’s The Annual SaaS Security Survey Report: 2024 Plans and Priorities, June 2023)

- 66% have boosted their spending in SaaS apps, and 71% have increased their investment in SaaS security tools

- 55% of organizations suffered a SaaS security incident in the past two years, up 12% from last year’s survey

(Source: Cloud Security Alliance’s The Annual SaaS Security Survey Report: 2024 Plans and Priorities, June 2023)

To get more details about the report, sponsored by Tenable partner Adaptive Shield, read the report’s announcement and download the full report.

For more information about SaaS security specifically, and cloud security in general, check out these Tenable blogs:

- “Organizations struggle with SaaS data protection”

- “Cloud Security Basics: Protecting Your Web Applications”

- “Don’t downplay SaaS security”

- “Revisiting CSA’s top SaaS governance best practices”

- “Identifying XML External Entity: How Tenable.io WAS Can Help”

VIDEOS

Tenable Cloud Security Coffee Break: Web app security

2023 will be the year of SaaS security | Tenable at Web Rio Summit 2023

6 – A guide for securing remote access software

Want to sharpen how your organization detects and responds to threats against remote access software? Check out the new “Guide to Securing Remote Access Software” jointly published this week by the U.S. and Israeli governments.

The guide outlines common exploitations, along with tactics, techniques and procedures used in this type of software compromise. Threat actors often abuse remote access software to unleash stealthy “living off the land” attacks – recently in the spotlight as the preferred method of Volt Typhoon, a hacking group backed by the Chinese government.

“Remote access may be a useful option for many organizations, but it also could be a threat vector into their systems,” said Eric Chudow, System Threats and Vulnerability Analysis Subject Matter Expert at the U.S. National Security Agency.

Attackers target remote access software for various reasons, including:

- It doesn’t always trigger response defenses from security tools

- It can allow attackers to bypass software management control policies, as well as firewall rules

- It can allow attackers to manage multiple, simultaneous intrusions

To get all the details, check out the joint alert, the announcement and the 11-page guide from the NSA, CISA, FBI, Multi-State Information Sharing and Analysis Center (MS-ISAC) and Israel National Cyber Directorate (INCD).