Learn about the promise and peril of generative AI for software development – and how it makes business execs both happy and fearful. Plus, do cyber teams underestimate risk? Also, NIST has a new AI working group – care to join? And much more!

Dive into six things that are top of mind for the week ending July 7.

1 – McKinsey: Generative AI will empower developers, but mind the risks

Generative AI tools like ChatGPT will supercharge software developers’ productivity, but organizations must be aware of and mitigate the AI technology’s security and compliance risks.

So says a new study from McKinsey, based on a weeks-long test involving 40 McKinsey developers who completed tasks with and without the help of generative AI tools.

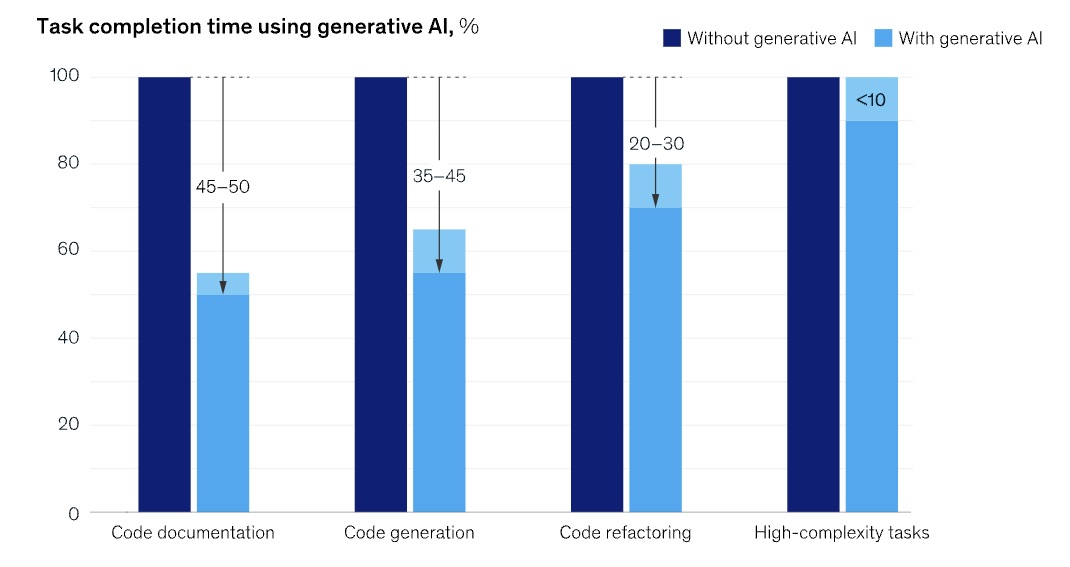

The main takeaway: Developers worked significantly more quickly when using generative AI tools for regular tasks such as code generation, optimization and documentation.

(Source: McKinsey’s “Unleashing developer productivity with generative AI” study, June 2023)

“Our latest empirical research finds generative AI-based tools delivering impressive speed gains for many common developer tasks,” reads the study, which also points out that the productivity boost drops when the tasks are more complex.

However, the increased speed of writing and updating code also brings a heightened risk of security and compliance missteps. Thus, organizations need to put governance guardrails in place to prevent issues such as:

- Data privacy violations

- Legal and regulatory infringement

- AI malfunctions due to malicious tampering

- Inadvertent use of copyrighted content or code

To get more details, read the full study “Unleashing developer productivity with generative AI.”

For more information about ChatGPT, generative AI and cybersecurity:

- “How to Maintain Cybersecurity as ChatGPT and Generative AI Proliferate” (Acceleration Economy)

- “ChatGPT, the AI Revolution, and the Security, Privacy and Ethical Implications” (SecurityWeek)

- “The Fusion of AI and Cybersecurity Is Here: Are You Ready?” (CompTIA)

- “How Generative AI Is Changing Security Research” (Tenable)

- “The New Risks ChatGPT Poses to Cybersecurity” (Harvard Business Review)

2 – Kroll: Cyber teams are overconfident

Cybersecurity teams tend to overestimate their capacity to defend their organizations from cyberattacks.

That’s the main finding from Kroll’s “2023 State of Cyber Defense: The False-Positive of Trust” report, based on a global survey of 1,000 senior infosec decision-makers from organizations with revenue between $50 million and $10 billion.

“Our findings reveal a concerning inconsistency between organizations’ level of trust in their own cybersecurity status and their readiness to achieve true cyber resilience,” reads a report summary.

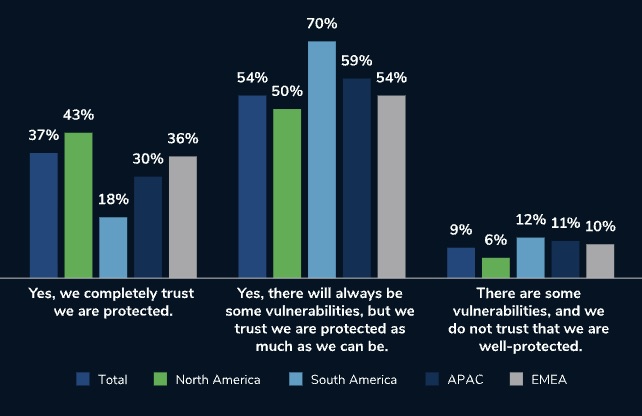

Specifically, the study found that 37% of respondents “completely” trust that their company is protected and able to defend itself against all cyberattacks, while another 54% feel they’re as protected as possible. However, organizations polled experienced an average of five major cybersecurity incidents in the past year.

Do you trust your organization’s cybersecurity defenses to successfully defend against most/all cyberattacks?

(Source: Kroll’s “2023 State of Cyber Defense: The False-Positive of Trust,” June 2023)

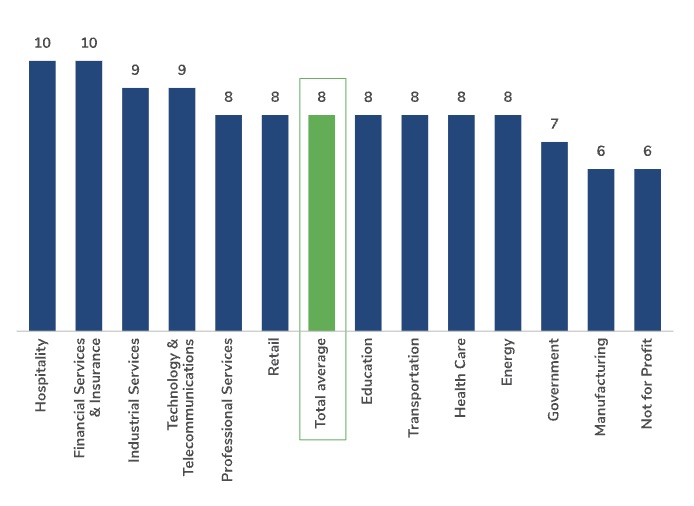

Significantly, the report found a direct correlation between the number of cybersecurity platforms an organization deploys and the number of cybersecurity incidents it experiences: the more platforms, the more incidents. On average, the organizations polled use eight platforms.

How many cybersecurity platforms does your organization use regularly to monitor cybersecurity alerts?

(Source: Kroll’s “2023 State of Cyber Defense: The False-Positive of Trust,” June 2023)

To get all the details, read the report’s announcement, check out a summary and download the full report.

For more information about the issue of overconfidence among cyber teams:

- “Cybersecurity teams are overconfident of their ability to deal with threats” (Beta News)

- “Cybersecurity Fails and How to Prevent Them” (InformationWeek)

- “Appraising the Manifestation of Optimism Bias and Its Impact on Human Perception of Cyber Security” (Howard University)

3 – KPMG: Generative AI excites and scares execs

Oh ChatGPT, the maelstrom of emotions you ignite in us!

Business executives are simultaneously thrilled and concerned about their organizations’ use of generative AI tools like ChatGPT. And their main areas of concern are – surprise, surprise – in the realms of security and compliance.

That’s according to a recent KPMG survey of 225 U.S. executives from businesses with $1 billion-plus in revenue. The survey found that:

- 65% of respondents expect generative AI to have a “high or extremely high impact” on their organizations in the coming three to five years

- Cybersecurity (81%) and data privacy (78%) rank as respondents’ top two concerns

Notably, most polled organizations are at early stages of developing a risk management strategy for generative AI. Specifically, with regard to risk evaluation and mitigation:

- only 6% have a dedicated team in place

- 25% are working on it

- 47% are in the risk evaluation stage

- 22% haven’t yet started

Equally concerning, only 5% of polled companies have a mature program for responsible AI governance, while almost 20% are building one. The rest either intend to create one but haven't started (49%) or don't believe they need one yet (27%).

To get more details, you can read a survey summary or the full report.

For more information about using generative AI securely in the workplace:

- “Ready to Roll out Generative AI at Work? Use These Tips to Reduce Risk” (ReWorked)

- “ChatGPT is creating a legal and compliance headache for business” (ComputerWeekly)

- “5 ways to explore the use of generative AI at work” (ZDNet)

- “Generative AI at work: 3 steps to crafting an enterprise policy” (CIO Dive)

- “5 methods to adopt responsible generative AI practice at work” (CIO Magazine)

4 – NIST unveils generative AI working group

Highlighting the urgency around curbing the risks of tools like ChatGPT, the U.S. National Institute of Standards and Technology (NIST) has launched the Public Working Group on Generative AI.

The group will focus on AI systems that are capable of generating content, including text, videos, images, music and computer code. Its charter is to build upon NIST’s AI Risk Management Framework and help organizations develop, deploy and use generative AI securely and responsibly.

The group’s goals include:

- Short term: Gather input and guidance on using the framework to support the development of generative AI technologies while addressing related risks

- Medium term: Help NIST with its efforts on testing, evaluating and measuring related to generative AI

- Long term: Facilitate the development of generative AI technologies that address top challenges in areas like healthcare and climate change

If you’re interested in joining the NIST Generative AI Public Working Group, you have until July 9 to submit this form.

To get more details about the working group and other NIST AI-related initiatives, read the group’s announcement, check out the framework’s main page and watch these videos:

NIST Conversations on AI | Generative AI | Part One

NIST Conversations on AI | Generative AI | Part Two

5 – CISA: Hackers again exploit known Telerik vulns in fed agencies

Say it once again with feeling: Fix critical known vulnerabilities.

Back in March, we reported on an eye-opening advisory in which CISA detailed how attackers had breached the web server of an unnamed U.S. federal agency by exploiting known, years-old vulnerabilities.

Well, CISA recently updated that advisory, and the news isn't encouraging: In April, CISA discovered that APT attackers had breached another federal agency by exploiting one of those vulnerabilities, specifically one disclosed way back in 2017.

The original suspicious activity in the network of the first breached agency was detected between November 2022 and January 2023, although the hack may have happened as far back as August 2021.

Specifically, the attackers exploited a .NET deserialization vulnerability (CVE-2019-18935) in Progress’ Telerik UI for ASP.NET AJAX located in the agency’s Microsoft Internet Information Services (IIS) web server.

The vulnerability exploited in the second agency's breach was CVE-2017-9248, also in Telerik UI for ASP.NET AJAX running on an IIS web server.

As we suggested back in March, check out the “2022 Threat Landscape Report” from Tenable’s Security Response Team (SRT), which provides detailed insights and recommendations regarding the importance of fixing known, critical vulnerabilities on a timely basis.

“We cannot stress this enough: Threat actors continue to find success with known and proven exploitable vulnerabilities that organizations have failed to patch or remediate successfully,” the Tenable report reads.

To get more details, you can check out Tenable’s full “Threat Landscape Report,” read a Tenable SRT blog post and watch an on-demand webinar.

6 – U.K.’s NCSC spotlights cyberthreats against law firms

With almost 75% of the U.K.'s biggest law firms having experienced cyberattacks, the U.K. National Cyber Security Centre (NCSC) has just published a report aimed at helping the legal sector better understand how it's being targeted and how to improve its cyber resilience.

“Organisations in the legal sector routinely handle large amounts of money and highly sensitive information, which makes them attractive targets for cyber criminals,” wrote NCSC CEO Lindy Cameron in the report.

Some of the NCSC recommendations include:

- Ensure that senior leaders are engaged and knowledgeable about cyber risk

- Make a baseline assessment of your organization’s cyber posture

- Invest in staff training and awareness

To get all the details, check out the 24-page document titled “Cyber Threat Report: UK Legal Sector.”

For more information about cybersecurity in the legal sector:

- “It's Open Season on Law Firms for Ransomware & Cyberattacks” (Dark Reading)

- “Cybersecurity is a nemesis for law firms” (American Bar Association)

- “Cybersecurity for law firms” (British Legal IT Forum)

- “Legal Industry Faces Double Jeopardy as a Favorite Cybercrime Target” (Dark Reading)

- “Cybersecurity for Law Firms: What Legal Professionals Should Know” (American Bar Association)